I find very little value in trying to predict the future, but there is something about the beginning of a new year that brings out the crystal balls and tea leaves in all of us. We all want to prove our expertise by predicting new SEO trends. The thing is, very few people go back to check which predictions came true and which did not, but when someone does make an accurate prediction, you can bet that person will let you know about it, over and over.

Blue Magnet's clients do ask us about the SEO trends of 2018, which prompted me to write about what SEOs are seeing and how it relates to hospitality marketing.

These are not predictions. They are extrapolations from current SEO factors and issues. To put it simply, this is what I am seeing in SEO right now and expect in the near future. These are the SEO issues Blue Magnet is addressing with our clients over the next few months to prepare their sites to take full advantage of these trends.

The search environment will have no major disruptions.

I know many people desperately want SEO to be dead for a variety of reasons. Mainly, I think, so they never have to hear terms like “link juice”, “trust flow”, and “SERP” ever again.

We have to remember what SEO is actually based on: maximizing your website's exposure to people performing searches for which your website is a relevant result, and doing so within the terms of service established by the search engine. This kind of optimization will never go away.

Yes, the search engines will get better at understanding the intent and context of content, but at the end of the day, a search engine still has to make a judgment call on how to order the results on its results pages. That creates a need to optimize your content to align with the criteria (also known as ranking factors) the search engine is using.

In the past, we have seen shakeups in the search ecosystem with Google updates like Panda and Penguin. The thing is, Google makes over 500 updates to its search algorithm a year, and most of them go unnoticed by webmasters, yet a peek at MozCast shows there is volatility in the SERPs. We will not see major disruptive changes from Google. We will see hundreds of tiny changes that may or may not affect our sites.

2018 Search Environment

What does the search environment look like in 2018? A lot of the same. Gary Illyes from Google stated back in 2016 that RankBrain is the third most important ranking factor, behind content and links. The strategy for websites will not change: provide valuable content for the searcher and be recognized as a relevant authority on the topics you hope to rank for.

5 Stages of Travel

- Dreaming

- Planning

- Booking

- Experiencing

- Sharing

In terms of hospitality marketing, this means having relevant, valuable content related to every stage of travel on the website and getting links from other authoritative sources on the topics the website wants to rank for.

The stages of travel are micro-moments in the decision process where a marketer has an opportunity to influence the traveler. Each stage does not lead directly to a reserved room but is part of a funnel, leading the potential guest from the moment they first contemplate a trip and where to stay to the moment after their stay when they share their experience on social media.

In 2018 these micro-moments will stay the same. There may be shifts in platforms as new social media services become prevalent, but targeting travelers in these 5 stages will not change. The strategy will still work in 2018.

Circumvention of Google as a search agent.

Microsoft, Apple, Facebook, and Amazon all want Google deposed as the de facto gatekeeper of the web. According to NetMarketShare, Microsoft's Bing search engine barely tops out at 7% of searches performed. Going after Google head-on is not working for any of these companies.

Where they are seeing traction is in bypassing Google entirely. I interact with Amazon through the Amazon Echo device on a daily basis. When I am looking to buy something, I search on Amazon, not on Google. Google is losing out on data. Luckily for Google, I am still signed in to my Google account while using my Chrome browser, so it still gets some data from me.

Voice Search

In 2016, Greg Sterling at Search Engine Land reported that Google CEO Sundar Pichai announced at the Google I/O keynote that “20 percent of queries on its mobile app and on Android devices are voice searches.” Voice search is happening, and with Echo, Siri, and Cortana all available on everyday devices, Google is no longer the default gateway for search.

In the Google ecosystem, Dr. Peter Meyers from Moz demonstrated, using Google Home, that voice search results are linked to the rich snippets appearing on the SERP.

That is great for people using Google devices, but what about everyone else? Cortana on Windows and Xbox, Siri on iPhones, and Alexa on Echo and other Amazon devices all rely upon Bing to provide the answers to the voice search queries. As these companies grow their own search ecosystems, more and more searches will bypass Google. Meanwhile, Google also faces the issue of losing out on ad revenue from voice searches performed on its devices.

Chatbots

There are a lot of technical definitions of what a chatbot is and is not. I found very few of them truly helpful for discussing chatbots in relation to SEO and digital marketing. For ease of discussion, a chatbot is a program that takes natural language input, uses it to query a database, and delivers a relevant response.

For example, a person could ask a chatbot, “What is the temperature in Great Falls, MT?” The chatbot then translates the entry into a database query and returns the result to the person typing.

In many cases, the chatbot can collect data from the person typing, asking for a name, location, and other information to fill in a form.
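To make that definition concrete, here is a minimal sketch of the "natural language in, database lookup, answer out" loop, using the kind of basic hotel questions discussed later in this article. Everything in it is hypothetical: `HOTEL_FACTS` stands in for a real database, and simple keyword matching stands in for the natural-language understanding a production chatbot platform would provide.

```python
import re

# Hypothetical stand-in for the hotel's database; a real bot would query
# the property's own systems rather than a hard-coded dict.
HOTEL_FACTS = {
    "check_in": "Check-in begins at 3:00 PM.",
    "check_out": "Check-out is at 11:00 AM.",
    "pets": "Dogs and cats are welcome; ask the front desk about the pet fee.",
}

# Hypothetical keyword rules: a crude substitute for real natural-language
# understanding, mapping words in a question to a key in HOTEL_FACTS.
INTENT_KEYWORDS = {
    "check_in": {"checkin", "arrive", "arrival"},
    "check_out": {"checkout", "depart", "departure"},
    "pets": {"pet", "pets", "dog", "dogs", "cat", "cats"},
}

FALLBACK = "Let me connect you with our front desk team."


def normalize(question: str) -> str:
    """Lowercase and fold 'check in' / 'check-in' into 'checkin' (same for out)."""
    return re.sub(r"check[\s-]?(in|out)\b", r"check\1", question.lower())


def answer(question: str) -> str:
    """Translate a natural-language question into a lookup and return the answer."""
    words = set(re.findall(r"[a-z]+", normalize(question)))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return HOTEL_FACTS[intent]
    # Anything the rules do not recognize is handed to a human.
    return FALLBACK
```

Calling `answer("What time is check-in?")` returns the check-in fact, while a question the rules do not recognize, such as one about an airport shuttle, falls through to the front desk. That fallback mirrors the point made later in this article: the bot handles the simple, repetitive questions and leaves the rest to staff.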

There has been a serious upswing in interest in using chatbots for marketing.

Chatbots can be attached to Facebook, Slack, and other messenger platforms people are already using, which makes them a very personal way to connect with potential customers.

Domino’s Pizza launched a chatbot on Facebook at the beginning of 2017 which allowed people to order their pizza through the messenger. Is this easier than using the website? That is for the customers to decide, but the fact is, Domino’s and other companies are investing in this type of marketing. By creating these personal, specialized chatbots, they are forging a direct connection with the customer, bypassing Google, and bypassing competitors’ ads that may appear on the SERP.

Google Peeks at Chatbot Data with Chatbase

How do we know Google sees chatbots as a potential threat? At Google I/O this year (2017), Google announced Chatbase, a new analytics package for chatbots. Companies using chatbot marketing can use Chatbase to analyze and optimize their chatbots. Much like Google Analytics simplified reporting on how searchers were using your website while giving Google access to the same data, Chatbase will give Google data it was otherwise locked out of.

The hospitality industry is known for adopting new technology cautiously, but according to Shayne Paddock, the Chief Innovation Officer for TravelClick’s Guest Management Solutions product, hotels need to embrace chatbots. “The beauty of chatbots is that they free up hoteliers’ time so they can give more attention to facilitating unique or detailed requests…,” Shayne Paddock said in an October 26, 2017 interview with WebCanada.


Hotels will not rush to replace friendly staff with chatbots, but I do see them trying to keep up with all the ways their guests are trying to communicate with them. A quick survey of Blue Magnet’s social media managers about the kinds of questions people ask hotels on Facebook revealed that roughly 5% to 18% could be handled automatically.

These are simple questions about check-in and check-out times, hotel policies, and other basic facts, which a bot could easily look up and answer immediately.

Chatbots Answer the Simple and Repetitive Requests

Shayne Paddock clarified in his interview that chatbot and AI technology is not about removing the human element. Hospitality will always be about human-level service, but by allowing technology to solve the simple day-to-day problems, staff will have more time to focus on the complex problems a chatbot would not be able to help with.

The hospitality industry may not be on the cutting edge of marketing technology, but it will start to adopt these innovations and further bypass Google’s search. Potential guests will not ask Google about check-in times, hoping Google has found the answer on the hotel’s website somewhere; instead, they will ask the hotel’s chatbot (much the same way they are already asking the hotel’s Facebook page or Twitter account) and get an immediate, accurate response.


2018 will see the first iterations of chatbots being used by hotels to assist their guests. We may see them roll out at the brand level, as the development cost may be steep. There are also some great plug-and-play bots that interface with Facebook, Slack, and other messenger platforms and could be cobbled together by an independent hotel, so we cannot know where this innovation will come from, only that it is coming.

In 2018, we will continue to see Microsoft, Apple, Facebook, and Amazon use new technologies and devices to circumvent Google as the de facto gatekeeper of the web. Google will continue to explore new ways of monetizing search and gaining access to data that does not pass through one of its services.

What this means for hoteliers and hospitality digital marketers is that we might see organic traffic from Google decrease and organic traffic from Bing and other sources increase. Not in a cataclysmic way, but in subtle shifts. Good hospitality marketers need to be ready to move into whatever new channels potential guests start using to make their travel and accommodation arrangements.

Continued growth of rich snippets on the SERP.

I have already mentioned rich snippets, also referred to as featured snippets. Rich snippets appear on Google’s search engine results pages and are taken from one of the organic results. The snippet uses content from the website to provide an immediate answer to the searcher’s query without the searcher having to visit the site.

These types of snippets can be controversial. If you have built your website around answering specific queries and want to monetize the traffic from those queries, Google can take away all your traffic and your money by including your data in their rich snippet.

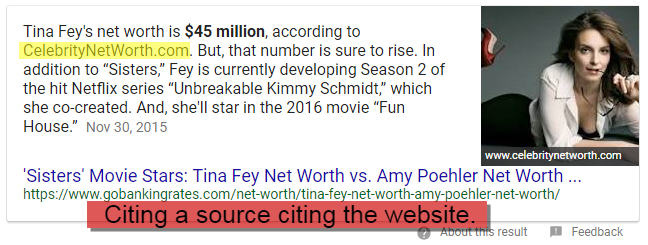

The website celebritynetworth.com blames Google’s snippets for slowly killing its business. The write-up of the drama between Google and Brian Warner is not exactly a techno-thriller, but it is a case study in the power Google has over information. The relationship went from Google asking for access to the site's data to Google simply scraping it.

The unfortunate thing was that the data boiled down to a single number. Yes, Brian Warner put a lot of work into compiling that number, but once it was published, Google could easily copy it from the website, or pick it up from another site citing it, and use it in a featured snippet. It was also a small enough chunk of data to answer the question “how much is this celebrity worth?” exactly.

Rich Snippets Continue to Grow

Google shows no sign of changing its tactics when it comes to featured snippets on the results pages. For many businesses relying on web traffic, these snippets actually help drive traffic. Moz and others in the search industry are calling it Position 0 because it comes before all other organic results, and Google does not rely on the website in the 1st position to provide the answer. Google will take the information from any site on the 1st page that has formatted the information in a way Google can use. This is a huge break for sites that might never reach position 1 but can make the 1st results page.

Featured Snippets… come before traditional organic results. This is why I have taken to calling them the “#0” ranking position. What beats #1? You can see where I’m going with this… #0.

-Dr. Peter Meyers, Ranking #0: SEO for Answers

Optimizing for the snippet is the new SEO trick. I do not think anyone has cracked the code on how to consistently get sites featured in the snippet, but Moz and SEMrush offer features in their tools to help identify which keywords are generating the rich snippet on the SERP. If your webpage is appearing on the first page of search results but is not capturing the snippet, there is an opportunity.

Optimizing content to win the snippet is one of the new challenges SEO is facing. It involves a lot of testing, and we are working through the particular difficulty of going after snippets on brand.com pages.

The tight control brand.com sites place on the content of their hotel pages often prohibits the type of formatting that would allow a hotel page to be featured in the snippet. I believe it will be possible, in some instances, for brand.com pages to capture Position 0, but it is much less of a challenge with an independent website, where schema and unique formatting can be applied to the content.

An additional consideration is the type of content brand.com has on their pages versus the type of content an independent website has. Brand.com focuses more on bottom-of-funnel search terms which are less likely to generate featured snippets.

A continued decrease in the effectiveness of Black Hat tactics.

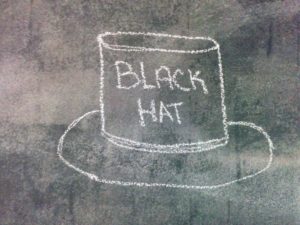

Black Hat, at least in the way I am about to use it, refers to tactics and activities violating the search engines’ terms of services. These are activities we associate with spam, low-quality content, and exploitative behavior.


Sometimes Black Hat tactics are illegal or immoral, but some, like paying someone for a backlink, guest posting on blogs, and using exact-match anchor text in links within a distributed web tool, are simply against Google’s terms of service and cause a lot of consternation and hyperbolic discussion in web forums.

Back in 2009, Ann Smarty, the brand manager for Internet Marketing Ninjas, ran a service connecting guest bloggers with guest blogging opportunities. It was a boon for SEO people trying to scale guest posting as a source of backlinks. Matt Cutts and the team at Google did not see it that way, and in 2014 they took action against her service.

I cite this case only to show Black Hat is not always about illegal and immoral practices. Sometimes Black Hat is just whatever Google or other search engines declare to be in violation of their terms of service. Some of these tactics arise as unforeseen consequences of Google’s own way of doing things. By placing so much weight on links, Google encouraged people to game the algorithm, which in turn led Google to make webmasters add code to links designating whether each one was a do-follow or no-follow link.

Black Hat Is Not Dead, Just Diminishing

Pretty much every month in the Black Hat forums on the web (there is nothing dark and sinister about these places; they are usually where affiliate marketers gather to discuss strategies), someone boldly proclaims Black Hat is dead. She will cite several examples, and then someone else will counter with their own set of examples where Black Hat still works.

In saying we will see a continued decrease in the effectiveness of Black Hat tactics, I am not proclaiming Black Hat SEO is dead. There will always be a way around the terms of service, exploiting a loophole the search engine has not yet closed. What I want to note is that the effectiveness is decreasing, the risk is rising, and the results are diminishing.

Once, a person could set up something called a “Private Blog Network”, or PBN: a group of high-authority sites whose owner takes great pains to hide from Google the fact that they are all owned by one person. This means using no Google products in association with the sites; using different content management systems, different themes, and different navigation structures; registering the domains under different identities; and registering them with different registrars…

It is a tiring process, but it once yielded strong results.

It takes longer to do it wrong and fix it than it does to do it right the first time.

-my dad

Now, Google has gotten better at detecting PBNs and is shutting them down. Because the cost in money and time of setting up a PBN is so high, the loss when one gets shut down only grows, while the effectiveness has diminished.

There are still Black Hat SEOs out there claiming they can spin up a PBN and use it to rank websites, but the demonstrable results are slim. The PBNs still working are carefully guarded, and they are one more reason why anyone working with an SEO company needs to know exactly how backlinks are being created. Having your site linked to from a PBN that gets taken down by Google is bad.

Black Hat Includes Negative SEO

This leads to Negative SEO tactics. Sometimes PBNs that have already been penalized are used to create backlinks to competitor websites in an attempt to get those sites penalized by Google.

This tactic was already sketchy, but Google has made it pretty clear it is not even considering those low-quality links anymore. When an SEO goes to the Google Disavow Tool to tell Google to ignore low-quality links pointing to her website, she sees this message: “In most cases, Google can assess which links to trust without additional guidance, so most normal or typical sites will not need to use this tool.”

These Black Hat SEO tactics are becoming less and less effective. We still need to monitor the links. We still need to worry about competition using a technique that catapults them up the rankings. We still need to care about how we are optimizing our sites, writing our content, and acquiring our links to make sure our tactics are not in violation of Google’s TOS.

We also need to think in terms of sustainable SEO tactics. If we think we have discovered a loophole, we should be aware Google will eventually close that loophole and our sites might suffer a penalty because of it. Sustainable SEO looks beyond an immediate short-term gain in order to build up a site over time for long-term gains.

A greater focus on user experience.

We started 2017 with articles like David Freeman’s “Why UX is pivotal to the future of SEO” on Search Engine Land, which offered insights like this one: “agencies and marketers must broaden their SEO approach by placing a much greater focus on UX across the full range of owned assets.”

At the beginning of 2017, the fact that user experience was going to play a role in SEO was well known. We had already seen hints of it in Google’s implementation of RankBrain, which was taking into account all sorts of data and metadata that had not previously been used in ranking webpages.


Brian Dean at Backlinko.com has already written up his 2018 trends and also points to user experience as a factor because of RankBrain.

“RankBrain focuses on two things: 1. How long someone spends on your page (Dwell Time) 2. The percentage of people that click on your result (Click Through Rate).”

I disagree that these two things are the focus of RankBrain, but they are most likely among the signals it uses. Website marketers are talking about a specific kind of bounce we call pogosticking.

Pogosticking is when a searcher clicks through to your site from the SERP, lands on your page, returns to the SERP, and selects a different site. It is a very specific type of bounce that webmasters cannot measure directly, since we do not know what searchers do once they leave our sites. Instead, we look in our analytics for very short dwell times on pages where we expect visitors to spend longer than a few seconds.
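The analytics check described above can be sketched as follows. This is a rough proxy, not a pogosticking detector: the `Session` record, the `source` naming, and the 10-second threshold are all hypothetical assumptions. Note also that many analytics setups cannot measure time spent on a single-page visit without extra event tracking, so `seconds_on_page` assumes that tracking exists.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    """One visit, as it might appear in a hypothetical analytics export."""
    landing_page: str
    source: str             # e.g. "google/organic"
    seconds_on_page: float  # assumes time is tracked even on one-page visits
    pages_viewed: int


# Assumption: under 10 seconds on a content page suggests a return to the SERP.
QUICK_BOUNCE_SECONDS = 10


def quick_bounce_share(sessions):
    """Per landing page, the share of organic visits that left almost at once."""
    organic = defaultdict(int)
    quick = defaultdict(int)
    for s in sessions:
        if not s.source.endswith("/organic"):
            continue  # only search visitors can pogostick back to a SERP
        organic[s.landing_page] += 1
        if s.pages_viewed == 1 and s.seconds_on_page < QUICK_BOUNCE_SECONDS:
            quick[s.landing_page] += 1
    return {page: quick[page] / organic[page] for page in organic}
```

Restricting the count to organic visits is the key design choice: a visitor arriving from a Facebook post cannot pogostick back to a results page, so including that traffic would dilute the signal. A page whose share stays high is a candidate for a content or UX review.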

Bounce Rate as KPI

If we go back to 2007, Avinash Kaushik, digital marketing evangelist for Google, coined a phrase about bounce rate that on-page conversion optimizers have been repeating on their blogs and in their presentations ever since.

If you are not in a video-watching mood, here is the pertinent quote, paraphrased.

Bounce rate is the web visitor saying “I came, I puked, I left”. When someone abandons the page moments after it loaded, she is not only bouncing, but sending Google a signal that this experience did not meet her intent when she made her search.

The bonus information in the video is that Avinash tells you what a good conversion rate and a good bounce rate for a site are. Okay, if you really do not want to watch the video, I will share them with you. Keep in mind this was 2007, so some adjustment is needed for improvements in site technology and conversion optimization techniques, but a good conversion rate is 2% and a good bounce rate is between 40% and 60%.

I like to see bounce rates on top-of-funnel and middle-of-funnel pages to be in that range and lower for bottom-of-funnel pages.
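Those rules of thumb translate into a simple check. This is a minimal sketch: the 40% to 60% range and the 2% conversion rate come from the quote above, but the function names are hypothetical, and reading "lower for bottom-of-funnel pages" as "below the 40% floor" is an assumption rather than a stated threshold.

```python
# Rules of thumb from the 2007 Avinash Kaushik video quoted in this article.
GOOD_BOUNCE_RANGE = (0.40, 0.60)
GOOD_CONVERSION_RATE = 0.02


def rate(part: int, sessions: int) -> float:
    """Share of sessions (bounces or conversions) as a fraction."""
    return part / sessions if sessions else 0.0


def needs_attention(bounce: float, funnel_stage: str) -> bool:
    """Flag a page whose bounce rate misses the target for its funnel stage."""
    low, high = GOOD_BOUNCE_RANGE
    if funnel_stage == "bottom":
        # Assumption: "lower for bottom-of-funnel" read as "below the 40% floor".
        return bounce >= low
    # Top- and middle-of-funnel pages: only a rate above the range is flagged.
    return bounce > high
```

For example, a page with 450 bounces in 1,000 sessions has a 45% bounce rate: that is fine for a top-of-funnel area guide, but it gets flagged on a bottom-of-funnel booking page. Likewise, 18 conversions in 1,000 sessions is 1.8%, just short of the 2% benchmark.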

7 Factors of User Experience

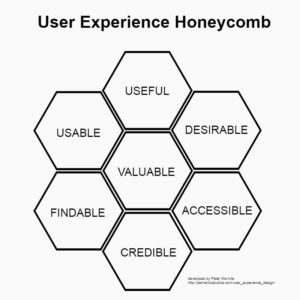

Back to user experience: there is ongoing discussion about exactly what it includes. There are technical factors, design factors, and content factors involved in UX. In 2004, Peter Morville, President of Semantic Studios, formulated a new structure to illustrate user experience, which he called the User Experience Honeycomb.

The seven elements included in this structure are:

- Useful – the webpage needs to be useful to the site visitor.

- Usable – the webpage needs to be intuitive for the site visitor to use.

- Desirable – the webpage needs to engage emotionally with the site visitor.

- Findable – the webpage needs to be easy for the site visitor to locate.

- Accessible – the webpage needs to be able to serve site visitors with disabilities.

- Credible – the webpage must present itself as worthy of the site visitor’s trust.

- Valuable – the webpage must deliver value to the site owner.

Most of these elements are self-explanatory. I do want to pause on the credible element and elaborate a bit more. Often digital marketing people will talk about credibility in terms of adding social proof and testimonials to a page. For the most part, that is fine, but we can dig a bit deeper on the topic.


Stanford University

Stanford University’s Web Credibility Project researched which design factors make a webpage credible. Its guidelines include:

- Make it easy to verify the accuracy of the information you are providing by linking to citations.

- Show that there is a real organization behind your site by providing a physical address and other proof you are real.

- Highlight the expertise in your organization, your content, and services by presenting team member credentials and affiliations with respected organizations.

- Show that honest and trustworthy people stand behind your site by showing your team.

- Make it simple to contact you by presenting phone numbers and emails in a clear way.

- Make the site look professional through consistent branding and styles.

- Make your site easy to use with clear navigation and make it useful to people.

- Update your site often in obvious ways.

- Keep ads and promotional content to a minimum.

- Keep errors to a minimum, including typos, spelling errors, and broken links.

You can see that some of these points overlap with the User Experience Honeycomb. All of this belongs to the same ecosystem of user experience, and while this research was done over a decade ago, these elements are going to become even more important in 2018.

In the coming months, we are going to see more effort put into making sites universally accessible, a reduction in weird navigation that turns the website into a puzzle, and simple styles and layouts that make the page intuitive right from the start.

These design elements will improve dwell time, reduce bounce rates, and improve the number of pages visited which will result in improved presence in search for the terms the site is relevant for.

Pulling All the SEO Trends Together AKA TL;DR

The 2018 SEO trends I see affecting the hospitality industry are as follows:

No major search engine disruptions.

Google will make countless changes in 2018 to how it displays search results and decides which results to display, but these will be micro-changes, not huge industry-ending ones. We have no reason to suspect we will need to drastically alter marketing strategies the way the SEO industry had to after the Panda and Penguin updates.

Google will lose ground on organic search.

With the increased use of voice search on devices not routed through Google, and with additional technologies like chatbots, Google will lose ground on organic search. Digital marketing for hotels will need to address this by optimizing for voice search and by exploring the other channels through which guests want to connect with them. Not every hotel needs a chatbot running on a Slack channel, but some might.

Google will rely more and more on rich snippets.

While I said earlier I do not expect a major disruption in the strategies we employ for SEO in 2018, I do expect Google to continue transforming the search engine results pages. At the end of 2017, we already saw changes to how Google treats meta descriptions. By increasing the length of the meta description, Google has effectively lengthened the SERP, which means even more scrolling to see the results at the bottom of the page.

Blue Magnet is putting together a plan of action for this change but it is not a complete disruption of our current strategy. We are redoing how we write our meta descriptions for hotel websites. This is in addition to the work we are already doing to try to capture Position 0, getting hotel websites into the featured snippet on the SERP.

Black Hat SEO tactics will have diminishing returns.

I have not seen much Black Hat in the hospitality vertical, but as competition increases there may be some who are persuaded to try tactics promising instant success. Link acquisition for hotel websites has become difficult as all the easy opportunities have already been acquired. This makes ‘schemes’ and ‘exploits’ very enticing. Maybe you start to think it is worth spending some money to get links on ‘relevant blogs’.

It is not worth it. Do not do it. For long-term, sustainable growth of your hotel website, focus on providing unique and valuable content that addresses top-of-funnel, middle-of-funnel, and bottom-of-funnel searches while becoming more engaged with your local community.

User experience will grow in importance for SEO.

RankBrain is a black box around which many conspiracy theories can be formed. I will not pretend I fully understand the depth of machine learning, but I do know many people believe RankBrain takes certain user signals into consideration. The most common theory is that bounce rate and time on site are two critical quality signals.

I have always felt bounce rate depended on the page. If the bounce rate of my contact page is high, I do not worry too much about it. The purpose of being on the page is to get my email address, mailing address, or phone number. A high bounce rate on a page in a critical part of the conversion funnel for the site is bad and needs to be addressed.

Time on page and the average number of pages per session are also big indicators of engagement for me. A visitor who landed on the page, did not puke, and stayed to see more is valuable to me. As long as she was not clicking around lost on the site but following the conversion path laid out for her, this is good: she was engaged with the content. The next time she performs a search for which the site is relevant, because of how personalized search works, the site will be given greater authority for her and appear higher in the rankings.

Top-of-funnel, or awareness, traffic might not convert on the first visit while the potential guest is in the dreaming stage of travel, but it will enable conversion when she reaches the planning and booking stages. Her earlier engagement with the site during a more generic search makes the site rank higher for her when she performs more specific, consideration- or action-type searches.

I do not like to say what Google wants to see or does not want to see, but having a site where visitors remain, read, and explore is good in and of itself. If it does turn out RankBrain does factor in these types of user signals and it helps site rankings, that is icing on the cake.

These are not ground shaking predictions for 2018, I admit. As I mentioned at the start, I try to avoid predicting the future since there is enough to focus on in the present. It is fun to speculate though.

To stay up to date on Blue Magnet’s marketing strategies for hotels, please join the 1,300+ hoteliers who receive our quarterly newsletter.